How to download large files on Linux from the terminal

Are you tired of waiting forever for large files to download on Linux? The terminal has some powerful tools that can help you maximize your bandwidth.


wget is a free command-line tool that downloads files non-interactively: it can keep working in the background even after the user has logged off. A browser download, by contrast, usually needs the user to stay logged in with the browser open until it finishes, which is not ideal for large files.

Advantages of using wget

wget is built to cope with slow or unstable networks. When a download fails, wget retries it, and it can resume from where the last attempt stopped (if the server you are downloading from supports Range headers). It can also work through proxy servers, which can speed up retrieval and lighten the network load.

wget supports recursive downloads. In a recursive download, wget downloads a file, follows the links it contains to download more files, and repeats the process until all the necessary files have been retrieved. This is how a website or another collection of files is usually downloaded: the first file (e.g., an HTML page) links to other files (e.g., images and stylesheets) that are needed to render the content fully. This is why wget can be tuned to work as a website crawler.

Last but not least, wget supports downloads over FTP, HTTP, and HTTPS, all internet protocols used to transfer data between computers. FTP (File Transfer Protocol) is a standard network protocol for transferring files from one host to another over a TCP-based network; it lets clients upload, download, and manage files on a remote server. HTTP (Hypertext Transfer Protocol) is the standard protocol for sending and receiving information on the World Wide Web: it is the foundation of data communication on the web and carries data from a server to a client, such as a web browser. HTTPS (HTTP Secure) is a variant of HTTP that encrypts the data exchanged between server and client over an SSL/TLS connection, which makes it much harder for someone to intercept and read the data in transit. HTTPS is commonly used to protect sensitive information such as login credentials and financial transactions.

wget comes preinstalled on most Linux distributions. If it is missing, install it on Debian-based distributions with:

sudo apt install wget

The wget syntax is:

wget [options] [URL]

wget invokes the wget utility.

[options] tell wget what to do with the URL provided. Some options have both a long form and a short form.

[URL] is the file or folder to download. It can also be a link to a whole website.

Download Options

We will cover the following download options:

Retrying when a connection fails.

Saving downloaded files with a different name.

Continuation of partially downloaded files.

Download to a specific folder.

Using proxies.

Limit download speeds.

Extracting from multiple URLs.

Mirror a webpage.

Extract entire websites.

Extract a website as if you were Googlebot.

Retrying when a connection fails.

By default, wget retries 20 times, except for fatal errors such as "connection refused" or 404 (not found), which are not retried. To set the number of tries:

# Long form

wget --tries=number http://example.com/myfile.zip

# Short form

wget -t number http://example.com/myfile.zip

The number can be 0 or inf for infinite retries:

# Long form

wget --tries=0 http://example.com/myfile.zip

# Short form

wget -t 0 http://example.com/myfile.zip
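On a flaky connection, infinite retries are often paired with a pause between attempts and with the continue option covered below. The sketch here wraps that combination in a function; the name fetch_resilient, the WGET variable (defaulting to wget, so you can set WGET=echo to preview the command), and the 10-second ceiling are illustrative assumptions. --waitretry makes wget back off linearly between retries of a failed file (1 second, then 2, and so on up to the value given), and --retry-connrefused treats "connection refused" as a temporary error instead of a fatal one.

```shell
# Hypothetical helper: download one URL, retrying without limit, backing off
# between failed attempts, and resuming any partial file already on disk.
# WGET defaults to wget; set WGET=echo to print the command instead of running it.
fetch_resilient() {
  "${WGET:-wget}" \
    --tries=0 \
    --waitretry=10 \
    --retry-connrefused \
    --continue \
    "$1"
}
```

Usage: fetch_resilient http://example.com/myfile.zip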

Saving downloaded files with a different name.

By default, the file is saved under a name derived from the URL. The -O (--output-document) option lets you specify a different name:

# Long form

wget --output-document=newfilename.zip http://example.com/myfile.zip

# Short form

wget -O newfilename.zip http://example.com/myfile.zip

In the code above, the downloaded file myfile.zip is saved as newfilename.zip. However, -O does not simply mean "use the name newfilename.zip instead of myfile.zip from the URL". Rather, it is analogous to shell redirection and works like:

wget -O - http://example.com/myfile.zip > newfilename.zip

newfilename.zip is truncated, and all downloaded content is written to it. Do not confuse -O with -o (--output-file), which writes wget's log messages to a file.

Continuation of partially downloaded files.

The continue option allows the continuation of a partially downloaded file. This can be a file that was downloaded by another

wgetinstance or another program.# Long form wget --continue http://example.com/myfile.zip # Short form wget -c http://example.com/myfile.zipAssuming there was a partial download with the name

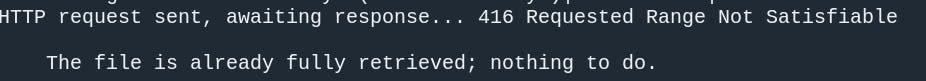

myfile.zip,wgetassumes the local copy is the first portion of the file and attempts to retrieve the rest of the file from the server using an offset equal to the length of the local file. In the event, the file found locally, and that on the server are of equal size,wgetprints an explanatory message

Consequently, should the file on the server be smaller than the one locally, the assumption made is the file on the server is an updated version. However, if the file on the server is bigger than locally due to a new version, the download will continue and the result becomes a garbled file.

The continue option only works with FTP servers and HTTP servers that support the Range header.
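To make the offset concrete: if 5 bytes of myfile.zip are already on disk, a resumed HTTP download asks for "everything from byte 5 onward" with a Range header. wget builds this header itself; the function below (range_header is a hypothetical name) only illustrates the arithmetic.

```shell
# Illustration only: print the Range header that corresponds to a partial
# local file. Byte offsets start at 0, so a file of N bytes means "send byte N
# onward". A missing file gives offset 0, i.e. the whole file.
range_header() {
  local size=0
  if [ -f "$1" ]; then
    # $(( )) strips the padding some wc implementations add around the number
    size=$(( $(wc -c < "$1") ))
  fi
  printf 'Range: bytes=%s-\n' "$size"
}
```

For a 5-byte partial file, range_header myfile.zip prints Range: bytes=5-. To check whether a server is likely to honor such a request before relying on -c, you can inspect its response headers with wget --spider -S http://example.com/myfile.zip; servers that support resuming usually answer with Accept-Ranges: bytes.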

Download to a specific folder

To save downloaded files to a specific folder, use the -P (--directory-prefix) option followed by the path to the directory where the files should be saved.

For example:

# Long Form

wget --directory-prefix=/path/to/directory http://example.com/myfile.zip

# Short form

wget -P /path/to/directory http://example.com/myfile.zip

Using proxies

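wget does not take the proxy URL as a command-line option. It reads the proxy from the http_proxy, https_proxy, and ftp_proxy environment variables, and the same settings can be given on the command line with -e (--execute), which runs a .wgetrc-style command.

For example:

wget -e use_proxy=on -e http_proxy=http://myproxy.example.com:8080 http://example.com/myfile.zip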

This downloads the file myfile.zip from http://example.com using the HTTP proxy at http://myproxy.example.com:8080.

If the proxy requires authentication, use the --proxy-user and --proxy-password options to specify the username and password.

For example:

wget -e use_proxy=on -e http_proxy=http://myproxy.example.com:8080 --proxy-user=myusername --proxy-password=mypassword http://example.com/myfile.zip

Note that GNU wget only works with HTTP(S) proxies: it has no --proxy-type option and no built-in support for SOCKS4 or SOCKS5 proxies. To send wget's traffic through a SOCKS proxy, run it under an external wrapper such as proxychains.

If every download should go through a proxy, set it once in the ~/.wgetrc file instead. You can edit the file with nano or your favorite text editor:

nano ~/.wgetrc

Add the following lines to the file

use_proxy = on

http_proxy = http://username:password@proxy.server.address:port/

https_proxy = http://username:password@proxy.server.address:port/
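If you set up several machines, the same lines can be written by a script. The sketch below is one way to do it, assuming bash; the function name configure_wget_proxy and the example proxy address are hypothetical. It honors the WGETRC environment variable, which wget itself uses to locate the user startup file (falling back to ~/.wgetrc), skips the write if proxy settings are already there, and restricts the file's permissions because it may contain a password.

```shell
# Hypothetical helper: append proxy settings to the wget startup file.
# Usage: configure_wget_proxy http://username:password@proxy.server.address:port/
configure_wget_proxy() {
  local proxy=$1
  local rc=${WGETRC:-$HOME/.wgetrc}   # same lookup order wget uses

  # Do nothing if a use_proxy line is already present.
  if grep -q '^use_proxy' "$rc" 2>/dev/null; then
    echo "proxy settings already present in $rc" >&2
    return 0
  fi

  {
    echo "use_proxy = on"
    echo "http_proxy = $proxy"
    echo "https_proxy = $proxy"
  } >> "$rc"

  chmod 600 "$rc"   # the proxy URL may embed credentials
}
```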

Limit download speeds

The --limit-rate option is used to limit the download speed of wget. For example, to limit the download speed to 100KB/s:

wget --limit-rate=100k http://example.com/file.zip

A value without a suffix is interpreted as bytes per second; there is no B suffix. Note that wget's k suffix means 1024 bytes, so --limit-rate=100k is 102,400 bytes/s. The same limit written in plain bytes is:

wget --limit-rate=102400 http://example.com/file.zip
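The suffix arithmetic is easy to get wrong, so here is a small converter that mirrors the documented rules (no suffix = bytes, k = 1024 bytes, m = 1024 × 1024 bytes). The function name rate_to_bytes is hypothetical, and for simplicity this sketch only handles whole numbers, although wget itself also accepts decimals such as 1.5m.

```shell
# Hypothetical helper: convert a --limit-rate value to bytes per second.
rate_to_bytes() {
  local value=$1
  case $value in
    *[kK]) echo $(( ${value%?} * 1024 )) ;;          # strip suffix, x 1024
    *[mM]) echo $(( ${value%?} * 1024 * 1024 )) ;;   # strip suffix, x 1048576
    *)     echo "$value" ;;                          # already in bytes
  esac
}

rate_to_bytes 100k     # prints 102400
rate_to_bytes 2m       # prints 2097152
rate_to_bytes 100000   # prints 100000
```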

Extracting multiple URLs

To download multiple files with wget, specify multiple URLs on the command line, separated by spaces.

For example:

wget http://example.com/file1.zip http://example.com/file2.zip http://example.com/file3.zip

This downloads file1.zip, file2.zip, and file3.zip. If you have a list of URLs in a file, one per line, use the -i (--input-file) option to make wget read the URLs from that file.

For example:

# Long form

wget --input-file=url-list.txt

# Short form

wget -i url-list.txt
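The list file is plain text with one URL per line, so it is easy to generate. A minimal sketch for numbered files, assuming the example.com names used above: printf reuses its format string for each remaining argument, producing one line per number.

```shell
# Write one URL per line, the format wget -i expects.
printf 'http://example.com/file%d.zip\n' 1 2 3 > url-list.txt
cat url-list.txt
# http://example.com/file1.zip
# http://example.com/file2.zip
# http://example.com/file3.zip
```

Since -i - reads the URLs from standard input, the intermediate file can also be skipped: printf 'http://example.com/file%d.zip\n' 1 2 3 | wget -i -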

Mirror a webpage

To create a mirror of a website, use the -m (--mirror) option. It turns on the settings suited to mirroring and is currently equivalent to -r -N -l inf --no-remove-listing: wget follows links recursively with no depth limit, downloading the pages and the files they reference (such as images and stylesheets), and uses timestamps to skip files that have not changed since the last run.

For example:

# Long form

wget --mirror http://example.com

# Short form

wget -m http://example.com

This downloads the page at http://example.com and everything it links to on the same host, saving them in a directory named example.com inside the current working directory. The files are saved in a directory structure that mirrors the structure of the website, with the home page saved as index.html and other pages saved in subdirectories as necessary.

You can also use the -k or --convert-links option to tell wget to convert the links in the downloaded HTML pages to point to the local copies of the files so that the downloaded copy of the website can be browsed offline.

For example:

# Long form

wget --mirror --convert-links http://example.com

# Short form

wget -m -k http://example.com
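For a copy that browses well offline, --mirror and --convert-links are commonly combined with a few more real wget options: --page-requisites fetches everything needed to display each page, --adjust-extension saves HTML pages with an .html suffix, and --no-parent keeps wget from climbing above the starting directory. The function name mirror_for_offline and the WGET variable (defaulting to wget, so WGET=echo previews the command) are illustrative assumptions; this is one reasonable combination, not the only one.

```shell
# Hypothetical helper: mirror a site (or one directory of it) for offline use.
#   --mirror            recursion + timestamping, unlimited depth
#   --convert-links     rewrite links to point at the local copies
#   --page-requisites   also fetch images, CSS, etc. that each page needs
#   --adjust-extension  save HTML pages with a .html suffix
#   --no-parent         never ascend above the starting directory
mirror_for_offline() {
  "${WGET:-wget}" --mirror --convert-links --page-requisites \
    --adjust-extension --no-parent "$1"
}
```

Usage: mirror_for_offline http://example.com/docs/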

Extract entire websites

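To download an entire website with wget, use the -r (--recursive) option to follow links and download all the files it finds. By default, recursion stops at a depth of 5 links; use -l (--level) to change this, for example --level=inf for no limit.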

For example:

wget --recursive http://example.com

Extract the website as Googlebot

To make wget identify itself as Googlebot, use the --user-agent option to change the user agent string it sends. For example:

wget --user-agent="Googlebot" http://example.com

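The page is requested with the user agent string "Googlebot", which claims that the request comes from Google's web crawler. This can be useful to see what a server returns to crawlers, or to get past restrictions that are based only on the user agent. Keep in mind that Google's real crawler sends a longer string, such as Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html), and that some sites verify Googlebot with a reverse DNS lookup, so a spoofed user agent does not always work. This command fetches a single page; add --recursive to extract the whole website.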

You can also combine the options demonstrated above, for example:

wget --user-agent="Googlebot" -O example.html http://example.com
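If you reuse the full Googlebot string often, it helps to keep it in a variable. A minimal sketch, assuming bash; the names GOOGLEBOT_UA and fetch_as_googlebot and the WGET variable (defaulting to wget, so WGET=echo previews the command) are hypothetical. The string itself is one of the desktop Googlebot user agents Google documents; Google uses several.

```shell
# One of the user-agent strings Google documents for its desktop crawler.
GOOGLEBOT_UA='Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)'

# Hypothetical helper: fetch one page ($1) as Googlebot and save it as $2.
fetch_as_googlebot() {
  "${WGET:-wget}" --user-agent="$GOOGLEBOT_UA" -O "$2" "$1"
}
```

Usage: fetch_as_googlebot http://example.com example.html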
